Llama-2 and the open source LLM 🌊

Anyone can own and run full stack LLM applications like never before

Like many in the field, we are excited by the release of Llama-2 and the narrowing gap between GPT-3.5 capabilities and those of open source models. This release holds the promise of widening adoption of LLMs overall by expanding usage to settings where models need to be self-hosted for privacy and ownership reasons. Further task-specific fine-tuning can help narrow the gap to GPT-4 levels of accuracy on those tasks. Self-hosting also provides a path to overcoming operational constraints such as throughput and latency.

We’ve put together this guide to help companies and users thinking about experimenting with Llama-2 get off the ground faster, and to integrate with Log10 to build their applications more reliably so they may deploy to production with greater confidence.

Recipe to self-host Llama-2

We have a fork of Meta’s Llama repo where we have put together code and instructions for how to deploy your own self-hosted Llama-2 model.

We also have a server currently running Llama-2 at http://34.224.72.112:5000 if you want to try it out! You can access it programmatically from Python via:

from log10.load import log10, log10_session

import openai

log10(openai)

openai.api_base = "http://34.224.72.112:5000"

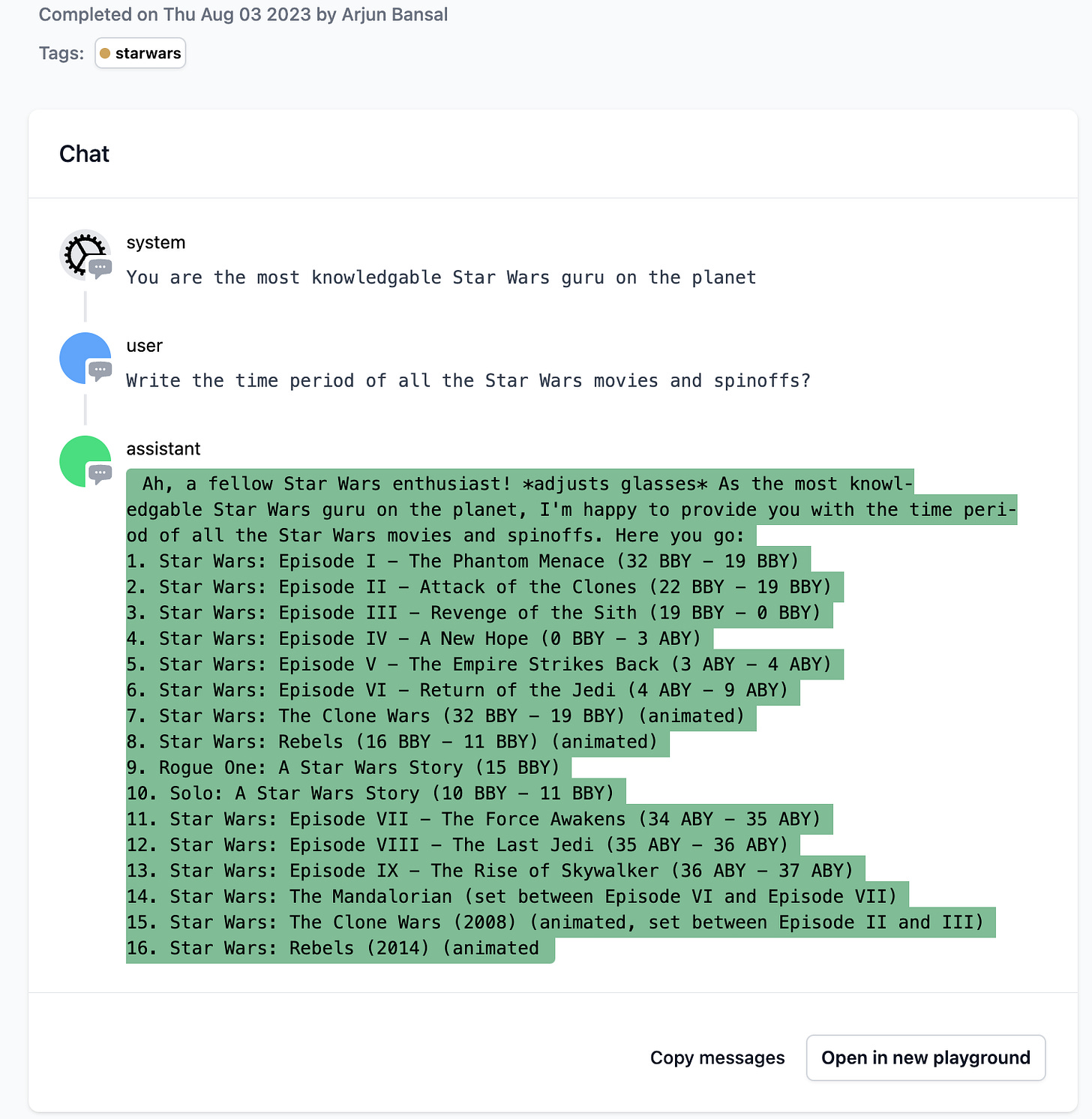

with log10_session(tags=["starwars"]):

completion = openai.ChatCompletion.create(

model="llama-2-7b-chat",

messages=[

{'role': "system", "content": "You are the most knowledgable Star Wars guru on the planet"},

{"role": "user", "content": "Write the time period of all the Star Wars movies and spinoffs?"}

]

)

print(completion.choices[0].message)Or if you just want to play with it you can call it directly from Terminal via this curl command:

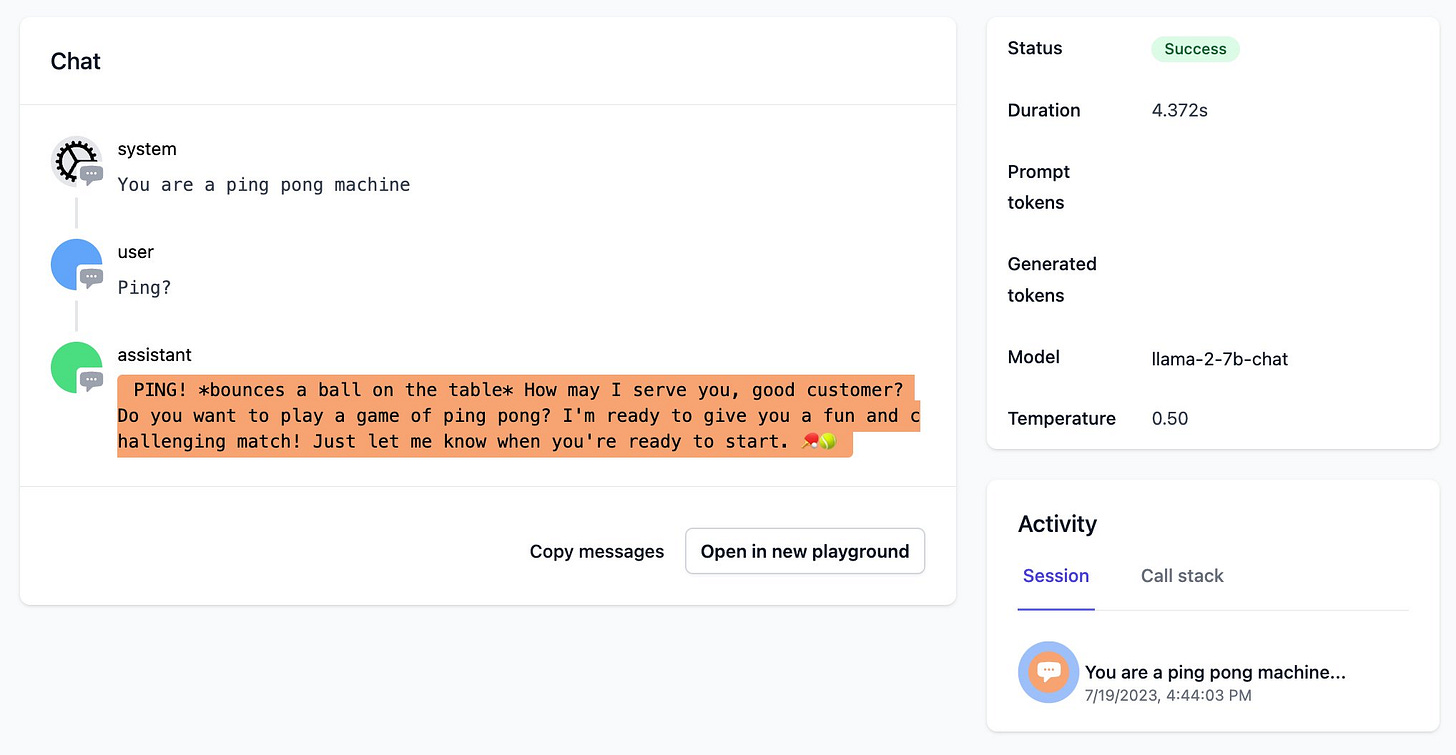

    curl -X POST http://34.224.72.112:5000/chat/completions -H "Content-Type: application/json" -d '{"model": "llama-2-7b-chat", "messages": [{"role": "user", "content": "Whats the Riemann hypothesis?"}]}'

Any calls made to these open source models can be logged and debugged via Log10 just as easily as with our existing support for OpenAI and Anthropic.
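If you would rather issue the same request as the curl command from Python without the openai package, here is a minimal sketch using only the standard library. The helper name `build_chat_request` is ours and purely illustrative; the URL, route, model name and payload are copied from the curl command, and the shape of the server's response is not assumed here.

```python
import json
import urllib.request


def build_chat_request(base_url, model, messages):
    """Build (but do not send) a POST request for the /chat/completions route.

    Hypothetical helper: it mirrors the curl command, nothing more.
    """
    payload = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request(
    "http://34.224.72.112:5000",
    "llama-2-7b-chat",
    [{"role": "user", "content": "Whats the Riemann hypothesis?"}],
)

# Sending is left commented out so the sketch runs without network access:
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(json.loads(response.read()))
```

Building the request separately from sending it makes it easy to inspect the exact URL and JSON body before anything goes over the wire.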

In addition, users have the flexibility of calling the models directly or, if they are using Langchain, through Langchain’s LLM abstraction. In the direct case above, we suggest converting the message format to and from OpenAI’s message format (handled automatically in the Llama-2 repo). Beyond that, it takes just a couple of simple changes compared to calling OpenAI directly: the model name and the API base URL.
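To give a feel for the message-format conversion mentioned above, here is a rough sketch of turning OpenAI-style messages into a Llama-2 chat prompt and wrapping the generated text back into an OpenAI-style message. This is not the code from the fork. The function names are hypothetical, the `[INST]` and `<<SYS>>` markers follow Meta's published Llama-2 chat format as we understand it, and the `<s>` / `</s>` markers are written as literal strings here, whereas a real tokenizer would add them as special tokens.

```python
# Markers of the Llama-2 chat format (assumed from Meta's reference code).
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def openai_messages_to_llama2_prompt(messages):
    """Flatten OpenAI-style chat messages into one Llama-2 chat prompt string."""
    messages = [dict(m) for m in messages]  # copy so the caller's list is untouched

    # An optional system message is folded into the first user message.
    if messages and messages[0]["role"] == "system":
        if len(messages) < 2 or messages[1]["role"] != "user":
            raise ValueError("a system message must be followed by a user message")
        merged = B_SYS + messages[0]["content"] + E_SYS + messages[1]["content"]
        messages = [{"role": "user", "content": merged}] + messages[2:]

    # The remaining roles must alternate user/assistant and end on a user turn.
    alternating = all(
        m["role"] == ("user" if i % 2 == 0 else "assistant")
        for i, m in enumerate(messages)
    )
    if not alternating or len(messages) % 2 == 0:
        raise ValueError("expected user/assistant turns ending with a user turn")

    parts = []
    # Completed (user, assistant) exchanges are closed with an end marker.
    for user, assistant in zip(messages[0::2], messages[1::2]):
        parts.append(
            f"<s>{B_INST} {user['content'].strip()} {E_INST} "
            f"{assistant['content'].strip()} </s>"
        )
    # The final user turn is left open for the model to complete.
    parts.append(f"<s>{B_INST} {messages[-1]['content'].strip()} {E_INST}")
    return "".join(parts)


def llama2_text_to_openai_message(text):
    """Wrap raw generated text as an OpenAI-style assistant message."""
    return {"role": "assistant", "content": text.strip()}


prompt = openai_messages_to_llama2_prompt([
    {"role": "system", "content": "You are the most knowledgable Star Wars guru on the planet"},
    {"role": "user", "content": "Write the time period of all the Star Wars movies and spinoffs?"},
])
print(prompt)
```

Keeping the conversion on the server side means clients can keep sending the same `messages` list they would send to OpenAI.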

Here’s an example of what that looks like for Langchain:

    llm = ChatOpenAI(model_name="llama-2-7b-chat", callbacks=[log10_callback], temperature=0.5, openai_api_base="http://34.224.72.112:5000")

And in the Log10 dashboard you will see:

Note the starwars tag: We recently added support for Tags (and filtering and searching based on tags) so you can group calls for further evaluation, feedback and fine-tuning!

We’ve used a similar approach to test MPT models as well. For example, the LLM can be set up via:

    llm = ChatOpenAI(model_name="mpt-7b-chat", callbacks=[log10_callback], temperature=0.5, openai_api_base="http://34.239.170.202:5000")

If you’re thinking about deploying open source models, or expanding to evaluation, collecting feedback and fine-tuning on top of open source models such as Llama-2, do reach out to us. We’d love to hear from you.