Note to readers “Arjun’s substack” is now “LLMs for Engineers”.

LLMs for Engineers is a free newsletter about helping technical people build great LLM-powered applications.

It’s created by the team behind Log10, an all-in-one platform for LLM application logging, debugging, evaluations and fine-tuning.

In each issue we share what we’ve learned, and best practices around scaling LLM-powered applications.

Large language models have seen tremendous adoption over the past year. Companies ranging from small startups to global conglomerates scrapped existing roadmaps so GPT-backed intelligence features could make it in.

Today, dev teams are experiencing a collective "now what?" moment. They’ve realized that it’s risky to deploy LLM-powered apps without understanding what "good" means, so they’re having to slow down development and are getting anxious about degrading user experiences.

User: “... only return python, do not return anything surrounding text.”

Assistant: “Sure! …”

User: 🤦To complicate matters, LLMs generate different answers even when being put in their most conservative sampling settings. A case that should be very familiar to LLM developers is unexpected variance in surrounding explanations, as shown above, where certain models end up being too polite and end up making chaining and programmatic use difficult.

The industry needs ways to establish evaluation criteria and assess LLM applications across metrics like correctness and safety. Unfortunately, we often end up talking about the importance of evaluation without concrete practices and systems to help us establish what “good” means.

llmeval: a systematic approach to evaluating LLM apps

In a previous blog post, we covered the four different types of evaluation we’re seeing developers use to test LLM applications:

Metrics-based: anything you can do with strings and metrics.

Tool-based: anything you can do by calling external tools

Model-based: calling bigger models (typically GPT-4) to be a judge of the generated output.

Human-in-the-loop: having humans being involved in offline or online evaluation, where a reviewer determines the quality of the generation.

In this blog post we introduce llmeval, a command-line tool that encompasses three of these evaluation types (i.e. metrics-based, tool-based, and model-based). It allows teams to establish an evaluation criteria for “good” and monitor LLM applications as part of the dev workflow. Most importantly, it allows teams to evolve reliable LLM apps at scale without slowing down development.

llmeval runs locally and is simple to use:

$ cd yourrepo

$ pip install llmeval

$ llmeval init # Initialize folder structure

$ llmeval run # Execute evaluation

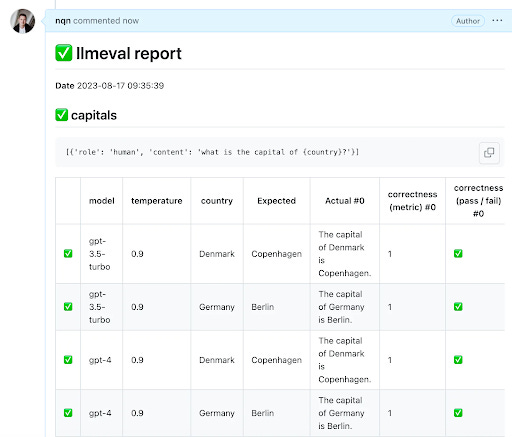

$ llmeval report # View markdown reportsllmeval works by providing a structured framework for managing prompts, tests, and results using a configuration system from Meta called Hydra. This makes llmeval very flexible, and any variable or prompt can be swept to provide a comprehensive view of how LLM applications behave in different settings.

After executing tests, llmeval generates reports summarizing pass/fail rates across your application and LLMs.

The metric logic is implemented in Python, so you can specify any desired logic along with your metrics-, tools- or model-based evaluations.

Three types of evaluation

Let’s go through an example of each type of evaluation that llmeval provides.

Metrics-based evaluations

To demonstrate prompt and test files and metrics evaluations, consider the math example:

defaults:

- tests/math

name: math

model: gpt-4-16k

temperature: 0.0

variables:

- name: a

- name: b

messages:

- role: "human"

content: "What is {a} + {b}?"With a test case like:

metrics:

- name: exact_match

code: |

metric = actual == expected

result = metric

references:

- input:

a: 4

b: 4

expected: "8"The metric variable is surfaced to the user in the report and could be a score or label. And the result variable whether the metrics passed or failed. This tends to be useful for applying thresholds.

Our metric here is a strict comparison between the expected value and the generated one. That should work, right?

Unfortunately not. Because we didn’t instruct the LLM only to output “9”, we get different results depending on the model:

GPT-3.5: 4 + 5 equals 9.

GPT-4: 9

Claude-2: 4 + 5 = 9

Fixing the original prompt to: “What is {a} + {b}? Only return the answer without any explanation” fixes the issue.

Other metrics based comparisons can be string distances, fuzzy matching (removing white spaces, special characters, capitalization) or semantic comparison using embeddings.

Tools-based evaluations

For code generating LLM applications like Natural Language (NL) to SQL, it is often useful to call the relevant tools - compilers, libraries or SDKs - directly in a controlled environment.

As metrics are written in python, you can run tools - like the Python AST parser - right in the test definition:

metrics:

- name: passes_ast

code: |

import ast

try:

ast.parse(actual.lstrip())

metric = True

except SyntaxError:

metric = False

result = metric

references:

- input:

task: "adds two numbers"

- input:

task: "finds the inverse square root of a number"Model-based evaluations

A technique that has a lot of attention and hope from the community, is model based evaluation where a much bigger model - typically GPT-4 - is used as a judge of the output from itself, or smaller models.

This tends to be useful in generative use-cases, where absolute metrics or tools can’t determine the output quality, as there may be many ways to express a correct or incorrect answer.

In llmeval, you can call out to models using existing libraries, like langchain’s evaluator library, or hand written critique prompts with examples and scoring instructions.

metrics:

- name: correctness

code: |

from langchain.evaluation import load_evaluator

evaluator = load_evaluator("labeled_criteria", criteria="correctness")

eval_result = evaluator.evaluate_strings(

input=prompt,

prediction=actual,

reference=expected,

)

metric = eval_result["score"]

result = metric > 0.5

references:

- input:

country: Denmark

expected: CopenhagenWhat to do when your tests (mostly) work?

LLMs are probabilistic machines, and even in their most predictable state will generate different answers for the same test, i.e. “flakey” tests.

To guard against turning “flakey” tests off completely, it is useful to sample tests and have thresholds that guard against LLMs degrading over time..

metrics_rollup:

name: "majority"

code: |

result = num_passes > num_failsIn this case, we just ensure that from the number of samples that llmeval carries out, the majority pass. This could be any logic (i.e. min, max, averages…), as the logic is written in python.

How to collaborate on evaluation with your teammates

llmeval runs locally and doesn’t need any accounts or logins. When teams want to check in llmeval prompts and tests, we have built an llmeval GitHub application which makes it easy to get reports right in the pull request, and block and iterate on problematic tests using github checks.

The llmeval GitHub application is still in alpha, so please reach out to founders@log10.io if you’d like to try it out.

Log10: build and ship reliable LLM-powered apps

As an industry, we’ve just started to explore what it means to develop LLM applications with confidence. We are starting to apply tried and tested software patterns and practices to LLM application development like automated testing, and discovering new categories of tooling that needs to exist to help LLM developers on their journey.

Our mission at Log10 is to provide end-to-end LLMOps for developers so they can build and ship reliable LLM apps in production. llmeval enables teams to organize their prompts and tests in a central repository, set the criteria for “good” using a variety of evaluation types, and systematically run tests and monitor results. Developers can continue the journey to ship reliable LLM apps with powerful tools for debugging, model comparison, and fine-tuning.

If you’re interested in LLM evaluation and building more accurate and reliable LLM-powered apps, I’d love to chat! You can reach me at nik@log10.io